The work shared here is a small portion of my work that is not under NDA. Additional details and more work are available upon request.

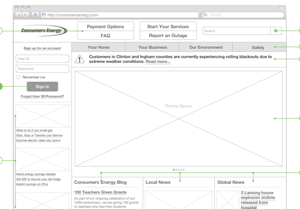

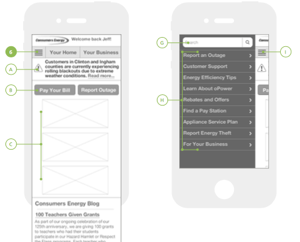

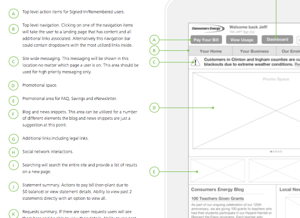

In mid-2012, I was tasked with creating designs in response to an RFP. I was given two weeks to reimagine Consumers Energy's existing feature set as a responsive web solution. Samples of the wireframes included in the RFP are shown below.

Download full PDF

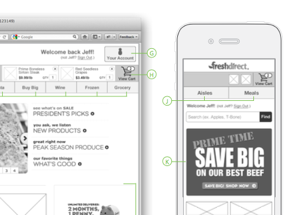

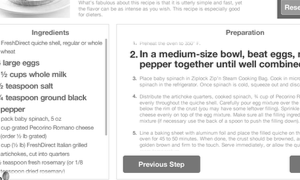

At the end of 2013, I was tasked with creating designs in response to an RFP. I was given two weeks to reimagine Fresh Direct's existing feature set as a responsive web solution and an accompanying mobile app. Samples of the wireframes included in the RFP are shown below.

Download full PDF

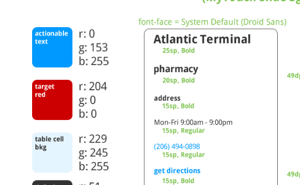

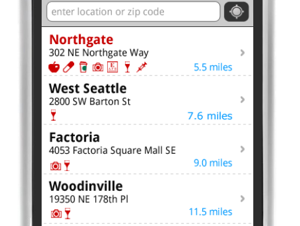

In 2011, Target was my primary client. I was responsible for the design of their Android application. Below are samples pulled from the pseudo-style guide that I prepared for them.

Download full PDF

In 2012, Allergan was my primary client. I was responsible for designing their mobile sales iPad app. After launch, the planned training was cut in half because of the ease with which sales reps learned to use the application. A couple of examples of the wireframes are shown below.

Download full PDF

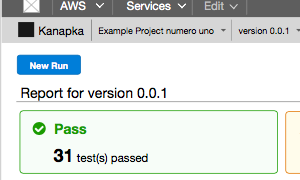

In early 2015, AWS acquired AppThwack, a mobile device testing service. I was tasked with redesigning their existing UI to fit within the AWS platform while making some needed UX fixes.

Download full PDF

View research performed for this project

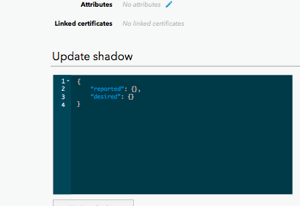

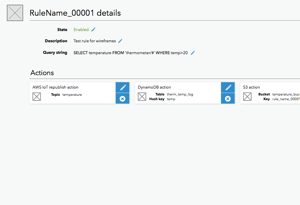

During the holidays in late 2015, I had some extra time and decided to redesign the newly launched IoT service. The launched design did not fit into the AWS platform very well, so I used the similarly new Mobile Hub as a guide for the redesign.

Download full PDF

View the HTML prototype

In late 2014 and early 2015, I worked with the Kinesis team to design and launch the new Firehose service. The service pipes streaming data from a source to a destination so that customers don't have to build the pipeline themselves.

I initially sketched out some ideas and wireframed them to get buy-in from the stakeholders. Once we agreed that we were heading in the right direction, I began building an HTML prototype to better communicate the intricacies of the design to the developers. None of the developers I worked with on the project had much front-end experience, so it was crucial for them to have a fully functioning prototype to refer to during implementation.

View full prototype.

During the holidays in late 2015, I had some extra time and decided to redesign the newly launched IoT service. The launched design did not fit into the AWS platform very well, so I used the similarly new Mobile Hub as a guide for the redesign. I chose to build an HTML prototype to keep my front-end engineering skills sharp.

View full prototype.

In 2013, I worked as the lead UX designer on Nike's mobile point-of-sale app. During that time, I occasionally developed motion prototypes to weigh options and facilitate discussions with the developers and stakeholders. The prototypes shown here are micro-interactions for adding an item to the cart and viewing how the total cost is calculated.

In mid-2015, our research team was very busy, and I was unable to get them to pick up a study I wanted to run for a soon-to-be-launched service whose management console I had designed. So I took it upon myself to run the study and gather the findings on my own. The full report is included below.

- Every participant was able to upload an application and run tests on it successfully

- Every participant was able to envision Device Farm augmenting their workflow

- Run reports contain the right data

- Recently uploaded selector was well received

- Screenshots are overbearing and should only be shown as-needed

- Device pool creation is confusing, especially when rules are added

- No list of supported devices in the documentation

- The value of the fuzz-test isn’t immediately apparent

- All participants were surprised to see iOS devices after they had uploaded an Android application. Most said they expected the pool selection control to be “smarter”

- Half of the participants clicked on the compatibility table’s status icons, expecting them to deselect incompatible devices

- The device compatibility alert was seen as a blocker: “I have to get to 100% compatibility before continuing”

- Most participants missed the part of the alert that said incompatible devices would be ignored during the run

- Two participants started creating a rule-based pool, expecting it to filter the table, and became confused when they couldn’t select or deselect devices

- Once understood, rules were seen as very powerful because they could combine many parameters, e.g., creating a pool for Samsung tablets with XHDPI displays running Android 4.2 through 4.4.4.

- “Type” was an unexpected label for the device's form factor.

- Most participants were interested in a device’s market share as one of their selection parameters. This may also reflect a desire to understand how devices are ranked within Device Farm.

- Number of devices in selected pool

- Device state info (fixture info?)

- More info about test scripts/package

- Every participant felt that the full-size screenshots were overwhelming and quickly became less than useful.

- Most participants suggested thumbnails as a first step and described a lightbox-style interaction as ideal.

- Every participant said that displaying screenshots for every test all at once was unnecessary and that they would be OK with having to “request” the screenshots.

- A few participants felt that there could be more data accompanying the screenshots so they could more easily correlate them with a specific log entry or time during the test.

- The ability to select device location from a map would be nice to have. Participants also mentioned that a random generator would be useful for fields that can take random values, such as device location.

- One participant mentioned being able to set the upper and lower display limits on the performance graphs to have a more consistent experience viewing performance within a run

- One of our participants was very security-aware and mentioned that low-level application vulnerability checks as part of the testing process would be really helpful

- Being able to download a filtered subset of the logs would help capture the output of a specific failure

- All participants felt that third-party integrations would be crucial. CodeDeploy and an Eclipse plugin were mentioned specifically. Mentioned less specifically was “integration with my build platform.”

In late 2016, it became clear to me that a team I was consulting for within AWS didn't really understand how their customers were actually using their management console. I wanted to solve this by observing customers using the console in their own environments with their own accounts. This type of study is unusual and wasn't a high priority for the research team, so I took on the task of running it myself. The research team helped me track down customers who were willing to share their data with us via screen share. The full report is included below.

- Participants liked that they can use the console to do things quickly

- Participants saw the console as something they could use to ‘set and forget’

- When used, the dashboard was seen as useful

- Participants had trouble getting up to speed on the service quickly

- The method flow view is confusing at first

- Request and response mapping configurations are hidden and sometimes confusing

- Lambda proxy integration was overlooked even though most participants would benefit from its use

- APIs being publicly accessible

- Lambda - Ability to see into and edit uploaded packages just like inline editing

- DDB - A query language/editor to see their data in the console

- Service menu - All participants made a point to call out how helpful the new search feature was and that it made their lives much easier

- Console home - Most participants called out the redesign and had positive things to say

- Console home - At least one participant mentioned that they wanted the ability to pin more services.

- Console home - At least one participant talked about wanting to have more control of what is displayed on the page to customize it to their workflow.